In 2017 I led the migration from our legacy hosting environment to Amazon’s Cloud Services.

The growth in Concept3D’s platform made it necessary to migrate from our original server environment to a fully scalable cloud based architecture: from managing a rack of servers, planning for maintenance windows, capacity planning for events...these tasks were far too stressful than they needed to be. Simultaneously, Concept3D was seeing a tremendous growth in our customer base, and, as the size of the clients using our platform increased, as well as the adoption of our platform by mobile users, it was clear that we needed to move our platform into the cloud.

There are two main aspects to moving your legacy code into a cloud environment: making the necessary changes to your software to adapt to the cloud, and modifying the servers to be scalable. The goal for our migration to the cloud was to keep the software changes to a minimum and put most of the burden of change in our server configurations. The main reason for this is that we had new products to develop, and we could not afford the time to perform a wholesale refactor of our application. Amazon’s platform is tremendously helpful in this regard, as they have taken many of the components of a server and developed products that scale for you.

Architect a Scalable Solution

The goals for the system were large, but the main areas were to be able to perform scaling on demand for our different applications and API’s, increase security, reduce outages, ease the management of the system, support docker containers, better testing and QA, and geographic redundancy. From a migration perspective, we had a goal that there would be no downtime for access to our front end applications, using the most current data and media, and we would not go back to our previous implementation (even though we were prepared for it).

The first step in the migration was to identify the areas of our premised-based application that were risks to our ability to scale. We then looked at what services within the AWS platform would be able to help us solve these problems. We then took the opportunity of the AWS free tier to create new EC2 instances that used these services, and were able to create a working prototype that we were able to increase instances sizes on to perform load tests and compare with our current system. While we wanted to limit the code changes required, we were not willing to sacrifice the benefits of the cloud to do so. Therefore, our changes centered around moving settings into config files, managing sessions, user management, and caching.

The changes to the server infrastructure were much more extensive. Amazon's breadth of services allowed us to take advantage of several key features that not only reduced the complexity of the migration, but provided immediate benefit to our environment and our ability to manage it. While most people think about the performance that end users will gain from moving to the cloud, one of the additional benefits it provides is the ability for more fault tolerant environments using the combination of scaling policies and managed services.

Our final architecture consisted of the following services:

- Elastic File System to store over 1 TB of imagery and shared access across all of our EC2 instances, across several regions

- Application Load Balancers to manage the routing to our EC2 Instances and Docker Containers

- Elastic Load Balancers to manage our PHP applications

- Target Groups, Load Balancers, Launch Configurations and Scaling Groups to manage the server farm capacity

- Cloudwatch Alerts and Scaling Policies to trigger server additions or deletions

- Cloudfront for Edge Content delivery

- Certificate Manager to handle SSL Certificates for Load Balancers

- RDS for our managed database

- Route53 for DNS management

Once our architecture was established, we performed a series of load tests to determine the proper size of our instances. When that was complete, the task of performing the data migration with no loss of performance was set to begin.

Lift and Shift

Most aspects of our system can be transferred within minutes or hours, however, the amount of user content in the form of pictures, VR Panoramas, videos and audio was close to 1 TB of data. Although we have backups, we needed to ensure that all of our data transferred. For this, we used rsync to transfer the data. We would perform the operation in a series of “sweeps” with the data, each pass having a smaller set of changes, until we could transfer new data in the appropriate time frame. Once our maintenance window was activated with our clients, we were able to easily migrate the database, and we performed our final rsync of data.

For both our PHP Code base and our Docker Containers, our lift and shift strategy was to create our own AMI’s with the EFS drive mounted, and manage the applications with our current processes. Though not the most efficient and cost effective use of the platform, it did provide us with the reliability and performance we expected.

We have some special use cases regarding access to our maps, with some of our clients using a CNAME to map to our server, as well as SSL access. For the lift and shift, we created load balancers for each CNAME client, and use the Certificate Manager to handle SSL authentication at the load balancer. All of our Classic Load Balancers used the same auto scaling groups, but allowed us to give unique URLs for our clients CNAME implementations. We migrated our clients with CNAMEs onto the load balanced system first, followed by our own DNS entries.

Post Production Launch

While our migration was successful, we did need to spend some time understanding the proper metrics needed for your auto scaling groups to function properly. For our PHP codebase, we use a Classic Load Balancer, while our Docker Containers run under an Application Load Balancer (not managed by ECS). In terms of Scaling Metrics, the both have different metrics available to help you assist. The Classic Load Balancer Model for scaling is to add resources before errors escalate, while the ALB model is to add resources just as failures are beginning. In the classic Load balancer, as your surge queue rises, you requests begin to take much longer to finish. In the dynamic use of our application these delays slow application performance, and could potentially get lost as the client application has moved on. The Application Load Balancer takes advantage of the nature of the network protocols to fail immediately, and allow the browser to make another request (and allow for the server to launch).

Using CloudWatch, we built a series of metrics based on using the Surge Queue, which measures the number of requests that are queued in the Load Balancer waiting for a server to handle the request. As this number rises, it’s a good determination that more servers are needed. We set a metric to add servers to the load balancer when this number goes above 50 for two consecutive measurements. Conversely, we have another policy that states to remove servers when the Surge Queue Length stays below 20 for four consecutive measurements. The ALB’s do not have a Surge Queue Length to measure. Instead, the corresponding metric is

The Rejected Connection Count, which measures the number of rejected connections by the load balancer. Because this is a “harder” failure than the surge queue, we act much quicker, if the rejected connection count > 0, we increase. When the rejected connection count is = 0 over a longer period of time, we decrease.

Year in Review

One year into our migration and, quite honestly, it’s hard to imagine a different way of doing things. Looking back at each goal we set, we succeeded at each one of them. From an end user experience, we can now scale on demand, providing a consistent load time across both networked and mobile devices, no matter the number of users accessing our system. We also have not had any downtime: once we found the correct instance size, created the proper alarms for our scaling policies and created deployment processes that take advantage of the cloud, we no longer need maintenance windows for application updates, and, for the most part, the system takes care of itself. While we still use this architecture for our CMS, as we develop our next generation applications, we are taking advantage of several more services, and are truly writing code for the cloud.

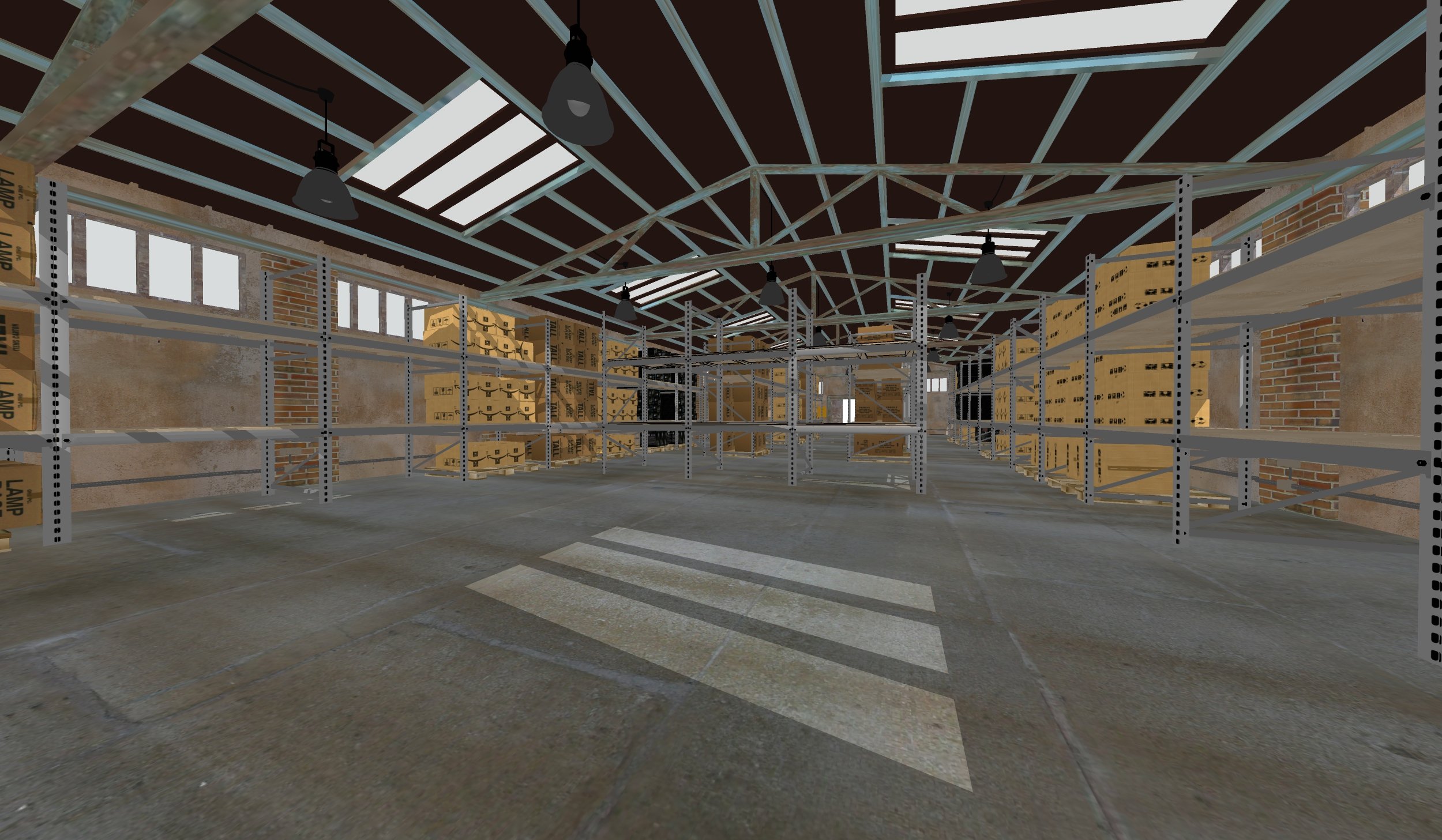

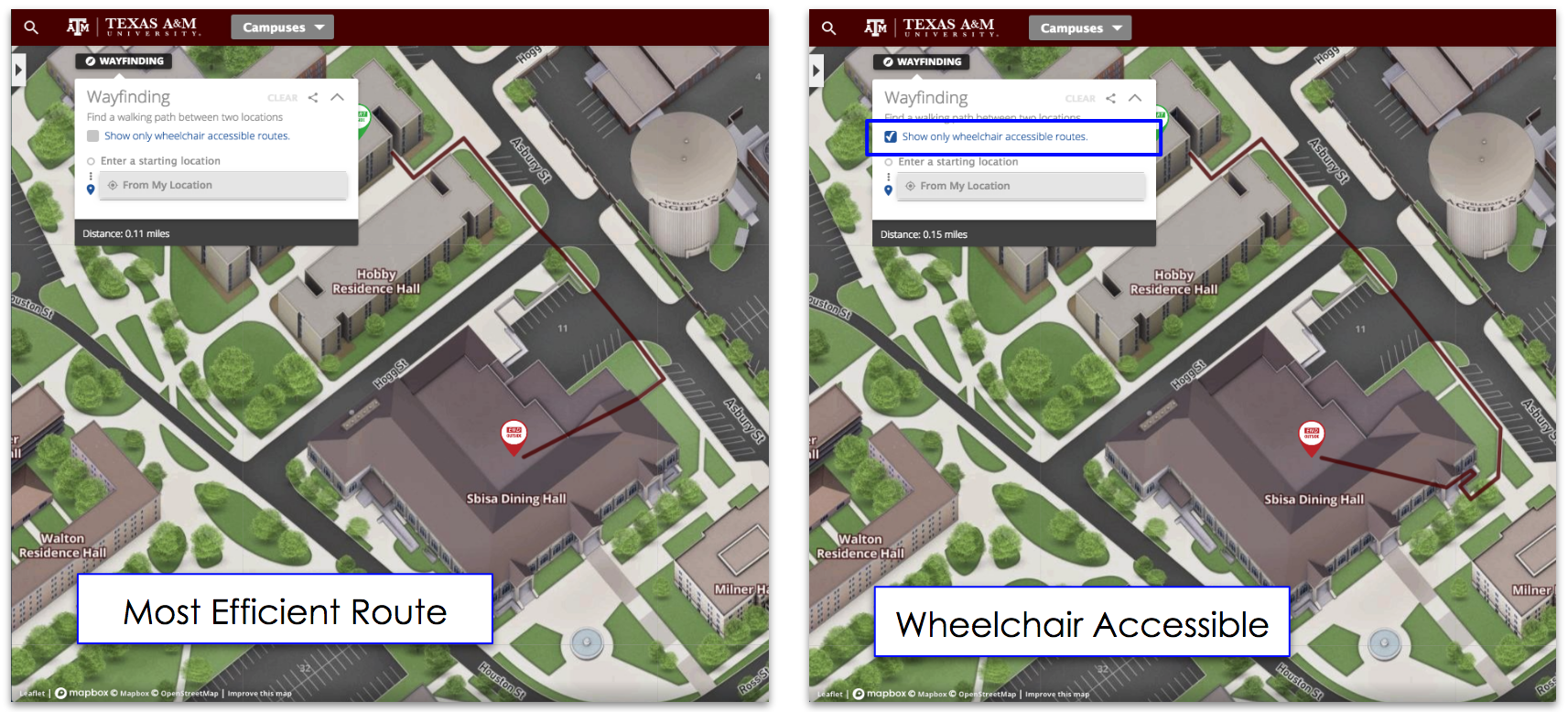

You may be wondering what we do at Concept3D: we create immersive online experiences with 3D modeling, interactive maps and VR-enabled virtual tour software. We blend these together to bring any physical location into an intuitive and navigable digital format, enabling our clients with applications like data visualization, wayfinding and VR. Our clients come from a variety of different industries, all of which have different needs from the Concept3D platform. Examples of our clients include data centers, healthcare and retirement facilities, convention centers and event venues. large commercial sites, resorts, hotels and theme parks, as well as universities, among others. Learn more and say hello at https://www.concept3D.com.

Topics: Interactive Virtual Experiences, Content Management System